By Marc Owen Jones

Despite efforts by EU and U.S. governments to curtail Russian disinformation by sanctioning or blocking state-backed media such as RT and Sputnik News, thousands of fake Twitter, Facebook, and YouTube accounts are currently spreading Russian state propaganda and disinformation. In fact, some accounts do so in a coordinated fashion in both Mandarin Chinese and English, helping to peddle Beijing’s propagandistic narratives, too.

Through a variety of network analysis techniques, I identified between 2,000 and 7,000 fake Twitter accounts that have been spreading Chinese- and English-language propaganda and disinformation since March 2022. The real number of fake accounts is likely to be much higher. The content includes videos, cartoons, and infographics that amplify a variety of pro-Beijing, pro-Moscow, antidemocratic, and anti-Western narratives. These Twitter accounts match many of the narratives used by fake accounts on Facebook and YouTube.

This network can help provide activists, civil society organizations, and democracies with unique lessons on how digital authoritarianism operates today, how the Chinese Communist Party (CCP) and Kremlin regimes amplify each other’s preferred narratives, and these operations’ impact on Chinese diaspora audiences.

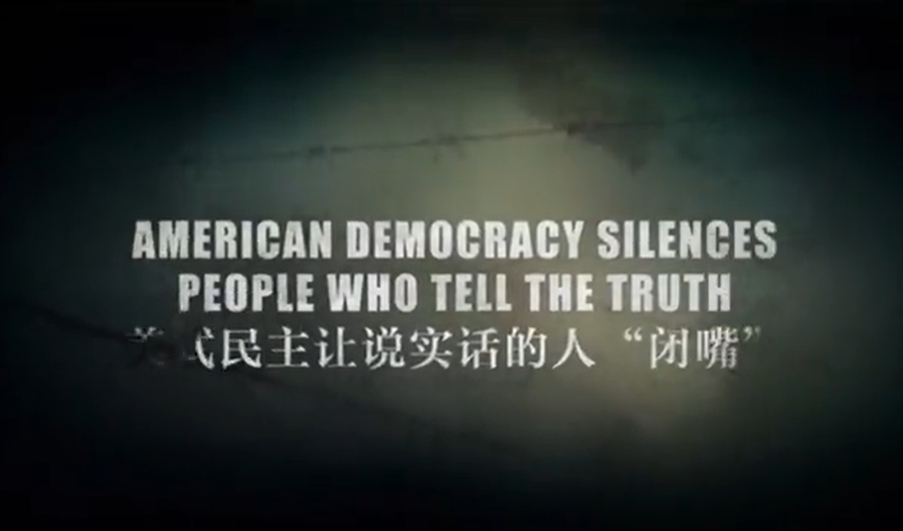

Screenshots collected by the author from “sockpuppet” (fake online accounts used by a person or group to promote a specific ideological agenda) Twitter accounts spreading pro-Beijing and pro-Kremlin propaganda.

What are these fake accounts saying?

Not all of these accounts that are dedicated to spreading propaganda are linked to Russia’s invasion of Ukraine; however, all tend to reflect pro-Beijing talking points on domestic and foreign policy, with content dating back several years. This network tends to press one or more of the following narratives:

- Attacking U.S. (and EU) foreign policy, with particular regard to their role in Ukraine, Taiwan, and protecting Chinese dissidents.

- Attacking Guo Wengui, an exiled Chinese businessman in the U.S. who is accused of corruption and is a major critic of General Secretary Xi.

- Praising the CCP’s response to the COVID-19 crisis, particularly in Hong Kong.

- Highlighting contentious societal issues in the U.S., presumably to provoke partisan division or to sow international doubt or misgivings among China’s diaspora about the U.S. democratic system.

- Boosting PRC officials’ Twitter accounts and foreign accounts whose views align with the CCP’s preferred narratives.

The disinformation narratives concerning the U.S. accuse Washington of “pouring fuel” on the Ukraine crisis through its pursuit of NATO expansion and its decision to send arms to Ukraine. Many of these posts even accuse the U.S. government of being the “mastermind” behind the conflict that Putin initiated. Numerous posts also condemn U.S.-backed sanctions on Russia, framing them as damaging and counterproductive to both American and EU citizens—for instance, by claiming that sanctions will raise gas prices.

This content also leverages the work of U.S. pundits and politicians whose views appear to align with Russian narratives. Images and videos of American and European pundits and politicians are shared routinely.

By highlighting alternative but prominent voices that criticize the West’s increased role in Ukraine, like Hungary’s Prime Minister Viktor Orbán, these disinformation narratives amplify Kremlin-backed narratives and deflect attention away from Moscow’s increasing isolation.

Screenshot collected by the author from a video tweeted by another sockpuppet account, criticizing U.S. democracy for supposedly silencing those who criticize the country’s pandemic response.

The same fake accounts that boost alternative voices and disinformation also provide a platform for CCP officials, including many PRC ambassadors, to perpetuate and bolster regime-approved narratives. The engagement these fake accounts receive from official figures online (through inflated numbers of “likes” and retweets) helps legitimize them from an average consumer’s perspective.

Because Twitter’s basic algorithm amplifies content that other accounts engage with heavily, this behavior will inevitably amplify PRC officials’ narratives that spread pro-Russian propaganda. Below are just some examples of PRC officials whose content has been boosted by fake accounts.

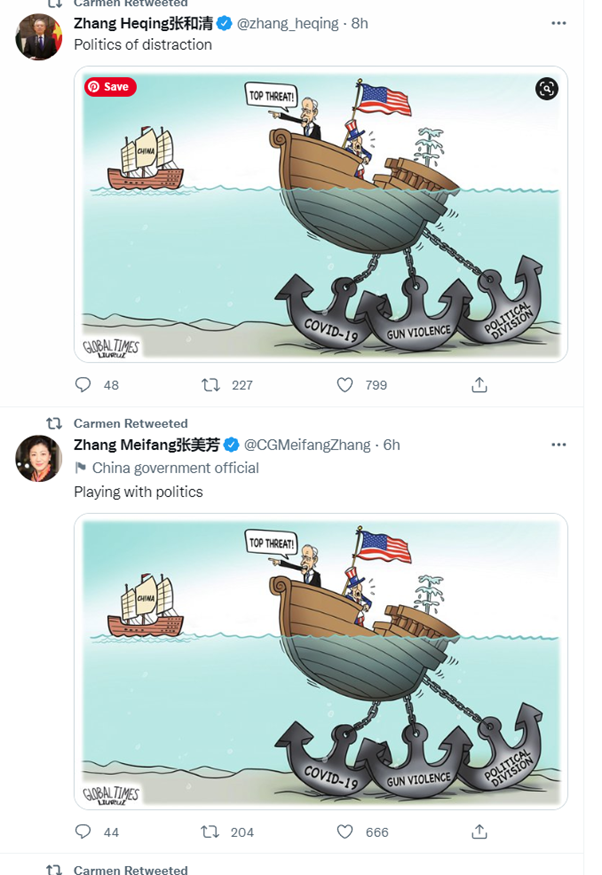

These two cartoons, published in the Global Times, a PRC-affiliated newspaper, show President Biden using China as a distraction from domestic issues in the U.S. The cartoons were shared on Twitter by the PRC’s Consul General in Belfast, Zhang Meifang, and retweeted by sockpuppet accounts. Screenshots compiled by the author.

How the network operates

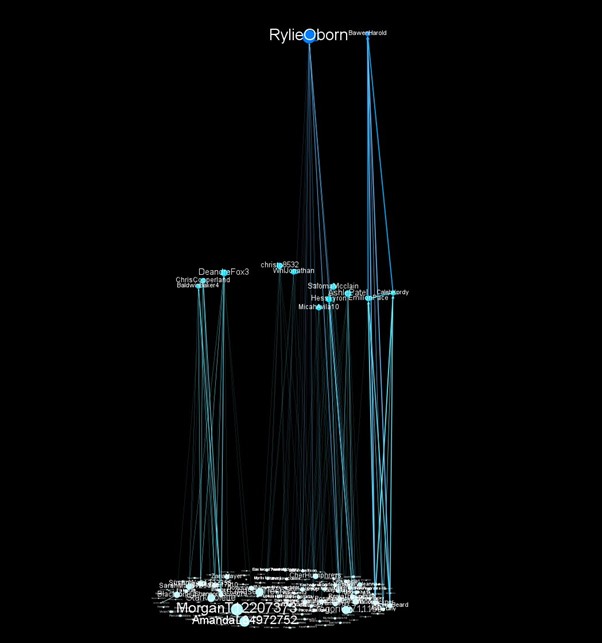

The fake accounts that make up this disinformation network follow a particular hierarchy in which only certain, key accounts produce original content. This content is then amplified by other accounts within the broader disinformation network. Tweets, posts, and other forms of content are subsequently boosted (retweeted and “liked”) by another network of “sub-accounts” that draw yet more attention to such content (by retweeting or replying to original material). Broadly speaking, this intricate web of mutually reinforcing content creation and amplification creates a tiered network that mirrors organic hierarchies in genuine Twitter networks. (Network analysis tools Gephi and NodeXL as well as the data analysis tool Tableau were used to explore the relationships between these accounts.)

This graph was generated using the network analysis tool Gephi and the algorithm Z Splitter, which divides the network into hierarchies based on specific characteristics, like relative influence. Here, the graph shows the “key accounts” at the top and “sub accounts” below.
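The tiering described above can be illustrated with a minimal Python sketch. It is not the Gephi workflow used for this analysis; it assumes a hypothetical list of `(retweeter, original_author)` pairs and simply treats accounts that are retweeted often as “key accounts” and everyone else as “sub-accounts.” The function name, the sample handles, and the threshold are illustrative assumptions.

```python
from collections import Counter


def split_tiers(retweets, min_retweets_received=2):
    """Split accounts into key accounts and sub-accounts.

    retweets: iterable of (retweeter, original_author) pairs (hypothetical data).
    min_retweets_received: illustrative cutoff, not a value from the article.
    """
    pairs = list(retweets)
    # Count how many times each account's content was retweeted.
    received = Counter(author for _, author in pairs)
    accounts = {account for pair in pairs for account in pair}
    key = {a for a in accounts if received[a] >= min_retweets_received}
    return key, accounts - key


# Made-up handles for illustration only.
sample = [
    ("sub_1", "key_a"),
    ("sub_2", "key_a"),
    ("sub_3", "key_a"),
    ("sub_1", "key_b"),
    ("sub_3", "key_b"),
    ("sub_2", "sub_1"),
]

key_accounts, sub_accounts = split_tiers(sample)
print(sorted(key_accounts))
print(sorted(sub_accounts))
```

A real analysis would likely weigh more than raw retweet counts (for example, who produces original content at all), but even this crude split makes the top-heavy shape of such a network visible.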

Accounts that are part of these disinformation networks mostly have similar numbers of followers and accounts-followed, indicating a “reciprocal follow-back” network; in other words, accounts inflate their followers by agreeing to follow other accounts in return. Many of these accounts lie dormant for several weeks before being activated suddenly to engage on a specific topic (for example, the war in Ukraine). There is also evidence to suggest the network eventually deletes its tweets, making its operation harder to track.
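Both signals in the paragraph above, near-equal follower and following counts and long silences followed by sudden activity, can be expressed as simple heuristics. The sketch below is an illustration under stated assumptions, not the method used in this analysis: the 10 percent tolerance, the three-week gap, and the sample numbers are all hypothetical.

```python
from datetime import datetime, timedelta


def looks_reciprocal(followers, following, tolerance=0.1):
    """True when follower and following counts differ by at most `tolerance`
    (as a fraction of the larger count). The tolerance is an assumption."""
    larger = max(followers, following)
    if larger == 0:
        return False
    return abs(followers - following) / larger <= tolerance


def longest_gap(timestamps):
    """Longest silence between consecutive tweets (zero if fewer than two)."""
    ordered = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    return max(gaps, default=timedelta(0))


def woke_from_dormancy(timestamps, min_gap=timedelta(weeks=3)):
    """True when the account was silent for at least `min_gap` at some point.
    Three weeks is an illustrative reading of "several weeks"."""
    return longest_gap(timestamps) >= min_gap


# Hypothetical account: a few tweets in January, then silence until March.
tweets = [
    datetime(2022, 1, 3),
    datetime(2022, 1, 4),
    datetime(2022, 3, 1),
    datetime(2022, 3, 2),
]

print(looks_reciprocal(followers=1020, following=1000))
print(looks_reciprocal(followers=5000, following=40))
print(longest_gap(tweets).days)
print(woke_from_dormancy(tweets))
```

Neither heuristic is proof of inauthenticity on its own; plenty of genuine users follow back or take breaks. They are more useful as filters for deciding which accounts deserve a closer look.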

The majority of accounts in these disinformation networks, regardless of their place in the hierarchy, appear to operate primarily on Twitter’s web-based app, and most of the accounts tweet a few aphorisms as their initial posts. Many of these accounts are old, dating from as early as 2006, and have very few associated tweets, suggesting they have been either hacked or purchased and then repurposed to disseminate disinformation. As these accounts often use dormant profiles, many of their followers are real. Thus, these accounts’ followers engaged with these profiles before they were turned into sockpuppet accounts; real people (not just other fake follower accounts) are potentially being exposed to pro-PRC and pro-Kremlin propaganda.
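The “old account, very few tweets” pattern can also be turned into a rough screening rule. The following Python sketch is a hypothetical illustration rather than the procedure behind the findings above; the five-year minimum age, the five-tweets-per-year ceiling, and the sample accounts are assumptions chosen only to make the idea concrete.

```python
from datetime import date


def account_age_years(created, today):
    """Account age in (approximate) years."""
    return (today - created).days / 365.25


def possibly_repurposed(created, tweet_count, today,
                        min_age_years=5.0, max_tweets_per_year=5.0):
    """Flag accounts that are old yet have almost no visible tweets.

    Both thresholds are illustrative assumptions, not values from the article.
    """
    age = account_age_years(created, today)
    if age < min_age_years:
        return False
    return tweet_count / age <= max_tweets_per_year


today = date(2022, 6, 1)

# Hypothetical accounts: (creation date, number of visible tweets).
old_and_quiet = (date(2009, 6, 1), 12)
new_and_quiet = (date(2021, 11, 15), 12)
old_and_active = (date(2008, 1, 1), 40000)

print(possibly_repurposed(*old_and_quiet, today))
print(possibly_repurposed(*new_and_quiet, today))
print(possibly_repurposed(*old_and_active, today))
```

Because the network also appears to delete its tweets, a low visible tweet count may understate past activity, so a flag like this is a starting point for manual review rather than a verdict.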

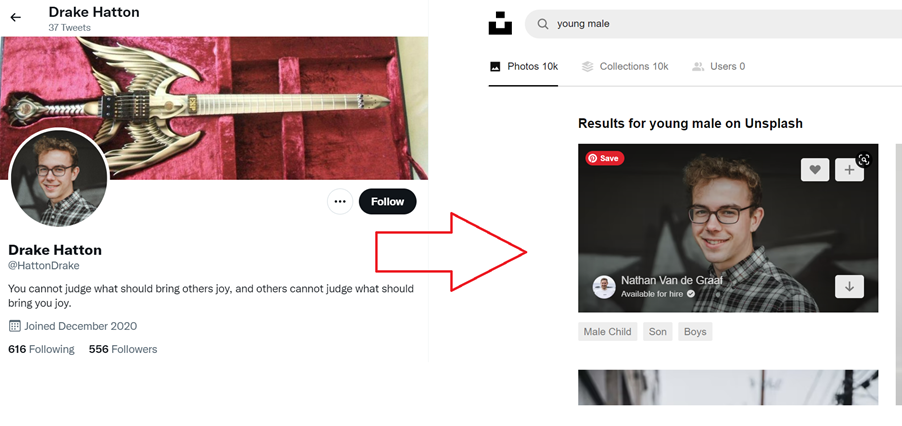

Reverse image searches revealed that the accounts featuring real human avatars used stolen photos. Take Drake Hatton below; his photo is actually a stock photo of a young man taken by a Norwegian photographer.

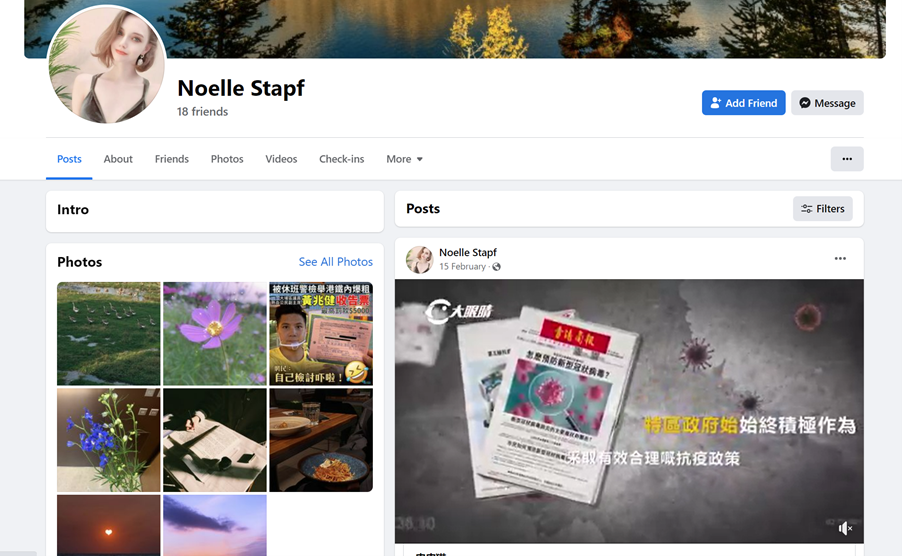

The network also appears to share content across social media platforms. A number of these accounts, for example, share videos linked to Facebook and YouTube accounts. Some of these Facebook accounts have identical banner images but different profile pictures. Several have thousands of followers and receive regular engagement, although much of this attention appears to originate from other fake accounts.

Numerous fake Facebook accounts present images of Asian women with Western-sounding names to spread propaganda, enticing readers to believe and engage with their content. Screenshots collected by author.

The content shared on YouTube is often shared from what look like “burner accounts.” This type of account is set up quickly and anonymously for the sole purpose of sharing content (in this case, short videos) that targets governments or public figures or highlights contentious domestic political issues. Often, these posts are shared with the knowledge that they will eventually be deleted by the respective platforms’ content moderation operations.

What This Network Might Tell Us about Digital Authoritarianism

Digital authoritarianism is the use of digital technology, including social media platforms, by authoritarian regimes to surveil, harass, censor, intimidate, influence, and control both domestic and foreign populations. This mostly Mandarin Chinese- and English-language social media network that openly peddles disinformation highlights the pervasiveness of pro-Beijing propaganda on various social media platforms. Specifically, analysis of the network itself and the narratives it promotes demonstrates that:

- Removing, labelling, or otherwise moderating authoritarian disinformation and propaganda on U.S. social media platforms is still fraught with difficulty. Old accounts can remain online or be purchased, dormant accounts can suddenly “come to life” in order to amplify propaganda, and authoritarians often use new circumvention techniques.

- Banning Russia Today, CGTN, or other state-affiliated media might only have limited effect in protecting Chinese-speaking diasporas from authoritarian narratives. Some Chinese-language networks on Twitter, Facebook, and YouTube are long-standing, heavily affiliated with PRC diplomatic accounts, and can be difficult to track at scale.

- Political synergies and tacit alliances between (likely) China- and Russia-sourced disinformation networks can amplify pro-Kremlin propaganda campaigns that easily circumvent platforms’ attempts to de-amplify disinformation after the invasion of Ukraine. Pro-Russian narratives regarding Ukraine and other false narratives still creep into new audiences in Chinese and other languages.

- “Liberation technology”—the idea that digital technology and social media platforms could empower resistance to authoritarianism—has, in this case and in many others, not combated authoritarian governance, but facilitated it. Of course, this principle does not apply to just the PRC; authoritarian regimes on every continent are co-opting such platforms for the dissemination of propaganda and intimidation of activists.

Marc Owen Jones is an associate professor of Middle East Studies at Hamad bin Khalifa University in Doha and a senior non-resident fellow at Democracy for the Arab World Now (DAWN) as well as the Middle East Council for Global Affairs (MECGA). He is the author of the upcoming book, Digital Authoritarianism in the Middle East: Deception, Disinformation and Social Media, published by Hurst and Oxford University Press. Follow him on Twitter @marcowenjones.

The views expressed in this post represent the opinions and analysis of the authors and do not necessarily reflect those of the National Endowment for Democracy or its staff.

Image Credit: Golden Dayz / Shutterstock.com